RAG Q&A Chatbot using OpenAI, LangChain, ChromaDB and Gradio

Retrieval-Augmented Generation(RAG) emerges as a promising approach that handles the limitations of Large Language Models(LLMs) mainly hallucinating information and inconsistent outputs. Using RAG, we can give the model access to specific information that can be used by the model as context to generate responses that are more factually correct and more consistent.

Let’s take a look at the RAG pipeline.

The process begins with a data source which can be text data such as PDFs that are loaded and stored as documents. During preprocessing, the documents are segmented or chunked into pieces that align with the model’s context window.

The segmented documents are fed into an embedding model, which generates vector representations of the text. These vectors are then saved in a vector database that can be used during the generation process.

Upon receiving a user query, the system employs the same embedding model to transform the query into a vector representation. This vector is then used to search the vector database for entries containing similar vector representations, indicating potentially relevant information.

The retrieval step in RAG essentially identifies relevant information from the vector database. We will talk more about this step as this forms an essential component in RAG.

The retrieved information becomes the context that provides the LLM with additional knowledge relevant to the user’s query. Finally, the original query is augmented, or enriched, with this context before being fed to the LLM for response generation, which ensures that the output is factually more correct.

What is Gradio?

Gradio is an open source Python library that simplifies the process of creating user interfaces for ML models, APIs, etc. In just a few lines of code, we can build a web interface that allows people to interact with the model.

Let’s get our hands dirty and start building a Q&A chatbot using RAG capabilities.

The first step is to import all necessary dependencies.

import gradio as gr

import os

from langchain_community.document_loaders import PyPDFLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain.embeddings import HuggingFaceEmbeddings

from langchain.chat_models import ChatOpenAI

from langchain.retrievers.document_compressors import LLMChainExtractor

from langchain.retrievers.multi_query import MultiQueryRetriever

from langchain.retrievers import ContextualCompressionRetriever

from langchain.prompts.chat import ChatPromptTemplate, HumanMessagePromptTemplate

from langchain.vectorstores import ChromaWe will discuss about all the dependencies as we move forward.

We would need to have the OpenAI API key to call the ChatOpenAI model. You can get it in their website.

Let’s create an instance of the chat model. Also let’s define the embedding function that we will be using to store the vector representations in the vector store.

BGE models on HuggingFaceare one of the best open source embedding models.

chat = ChatOpenAI()

embedding_function = HuggingFaceEmbeddings(model_name = "BAAI/bge-large-en-v1.5",model_kwargs={'device': 'cpu'},encode_kwargs={"normalize_embeddings": True})Now let’s define a function add_docs() that would take in a file(say a PDF), load and split it using RecursiveCharacterTextSplitter with chunk size 500 and chunk overlap 100. Then it would embed the documents using the embedding function and store it in Chroma VectorDB.

We will be using the same database during generation.

def add_docs(path):

loader = PyPDFLoader(file_path=path)

docs = loader.load_and_split(text_splitter=RecursiveCharacterTextSplitter(chunk_size = 500,

chunk_overlap = 100,

length_function = len,

is_separator_regex=False))

model_vectorstore = Chroma

db = model_vectorstore.from_documents(documents=docs,embedding= embedding_function, persist_directory="output/general_knowledge")

return dbRetrieval Process

For the retrieval process, we will use a combination of Contextual Compressor and Multi Query Retriever. I have discussed about these in my article.

We will define a function that first loads the vector store. It takes in the query from the user, creates the context using the query, augments the context to the prompt and generates a response. Since we are creating a chatbot, we also append the response to the chat history.

We use prompting techniques in LangChain to create the prompt templates for generation.

def answer_query(message, chat_history):

base_compressor = LLMChainExtractor.from_llm(chat)

db = Chroma(persist_directory = "output/general_knowledge", embedding_function=embedding_function)

base_retriever = db.as_retriever()

mq_retriever = MultiQueryRetriever.from_llm(retriever = base_retriever, llm=chat)

compression_retriever = ContextualCompressionRetriever(base_compressor=base_compressor, base_retriever=mq_retriever)

matched_docs = compression_retriever.get_relevant_documents(query = message)

context = ""

for doc in matched_docs:

page_content = doc.page_content

context+=page_content

context += "\n\n"

template = """

Answer the following question only by using the context given below in the triple backticks, do not use any other information to answer the question.

If you can't answer the given question with the given context, you can return an emtpy string ('')

Context: ```{context}```

----------------------------

Question: {query}

----------------------------

Answer: """

human_message_prompt = HumanMessagePromptTemplate.from_template(template=template)

chat_prompt = ChatPromptTemplate.from_messages([human_message_prompt])

prompt = chat_prompt.format_prompt(query = message, context = context)

response = chat(messages=prompt.to_messages()).content

chat_history.append((message,response))

return "", chat_historyWith all done, let’s define a function that creates the UI where in we first upload a file. We also create a textbox wherein the user enters a query and finally the answer_query() function which returns the response and adds it to the chat history.

with gr.Blocks() as demo:

gr.HTML("<h1 align = 'center'>Smart Assistant</h1>")

with gr.Row():

upload_files = gr.File(label = 'Upload a PDF',file_types=['.pdf'],file_count='single')

chatbot = gr.Chatbot()

msg = gr.Textbox(label = "Enter your question here")

upload_files.upload(add_docs,upload_files)

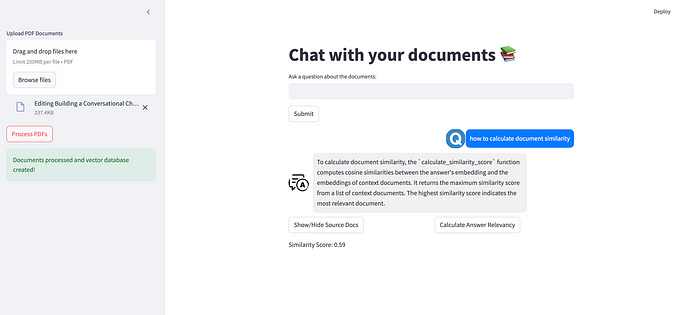

msg.submit(answer_query,[msg,chatbot],[msg,chatbot])After testing this, we can deploy it to Spaces by HuggingFace. We first create a Space and next we need to upload two files: app.pyand requirements.txtin the files section of the space. Now, if the build gets successful, we will have our interface ready.

This is how the interface looks like.

You can try out the app here:

I have added all the scripts here.